Enterprises are rapidly adopting AI-based applications and moving to cloud-based infrastructure, which calls for advanced tools that ensure seamless connectivity, robust security, and optimal performance.

Large Language Models (LLMs) are powerful artificial intelligence tools trained on vast amounts of data to understand and generate human-like text. Their applications span many domains. Here are some critical applications of LLMs:

- Natural Language Processing (NLP)

- Customer Support and Chatbots

- Content Creation

- Code Generation and Programming Assistance

- Search Engines and Information Retrieval

- Data Analysis and Insights

This blog focuses on how Alkira helps its customers securely access AI LLM applications in their environments.

Challenges with LLMs

Integrating Large Language Models (LLMs) into enterprises presents unique challenges. These challenges span data privacy, performance, scalability, and regulatory compliance, each requiring careful consideration to ensure successful and secure implementation.

1. Data Privacy and Security

Enterprises handle vast amounts of sensitive data that require stringent privacy and security protocols. Integrating LLMs introduces significant risks, including:

- Data Exposure: LLMs require extensive datasets for training, which may inadvertently contain sensitive information. Ensuring this data is anonymized and secure is paramount.

- Security Measures: Integrating LLMs should include advanced security mechanisms to mitigate potential vulnerabilities and protect against adversarial attacks.
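As an illustration of the data exposure concern above, here is a minimal sketch of masking obvious identifiers in text before it is used for training or sent to an LLM. The pattern list and the `redact` helper are hypothetical and cover only two simple cases; a real deployment would likely rely on a vetted PII-detection tool rather than a pair of regular expressions.

```python
import re

# Hypothetical patterns for illustration only; not an exhaustive PII list.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match of a known pattern with a placeholder tag."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com, SSN 123-45-6789."))
```

Masking of this kind reduces what leaves the enterprise boundary, but it does not replace network-level controls on where the traffic is allowed to go.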

2. Performance and Scalability

LLMs are computationally intensive and can impact the performance and scalability of enterprise networks:

- Latency: Real-time enterprise applications demand low-latency solutions. LLMs, especially large models, can introduce processing delays that are detrimental to network performance.

- Scalability: Ensuring that LLMs can efficiently scale to handle large network traffic and data volumes is crucial.

3. Regulation and Compliance

Compliance with data protection regulations is a critical aspect of integrating LLMs into networking solutions:

- Adhering to Regulations: Compliance with GDPR, CCPA, and other regional data protection laws can be complex. LLMs must be trained and operated in a way that preserves data privacy and meets these legal requirements.

Addressing the Above Challenges with Alkira

Alkira recognizes the significant potential for customers in integrating large language models (LLMs) into their applications, and also understands the critical challenges this integration presents. Alkira can employ advanced data segmentation to ensure data privacy and security, with robust security measures in place to prevent unauthorized access and mitigate vulnerabilities.

RBAC

Below are the capabilities of the Alkira solution that customers can leverage to meet the requirements for connecting LLM-based applications:

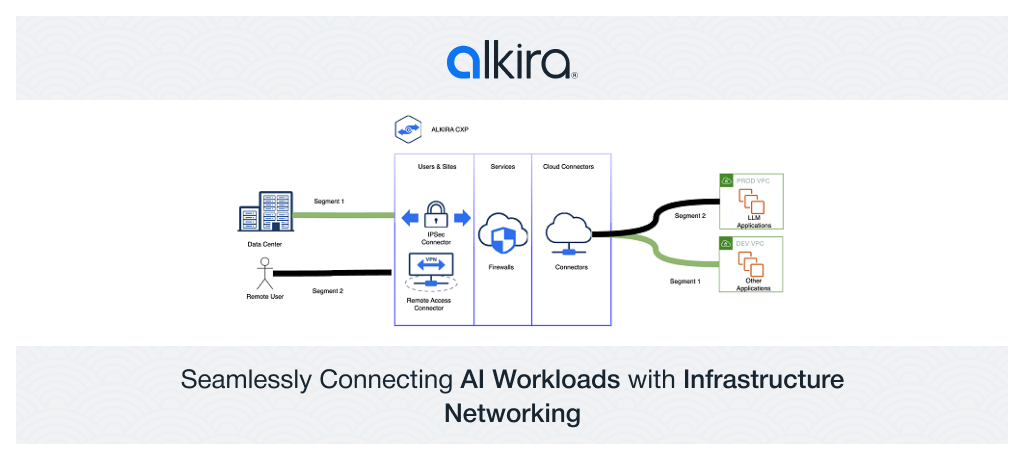

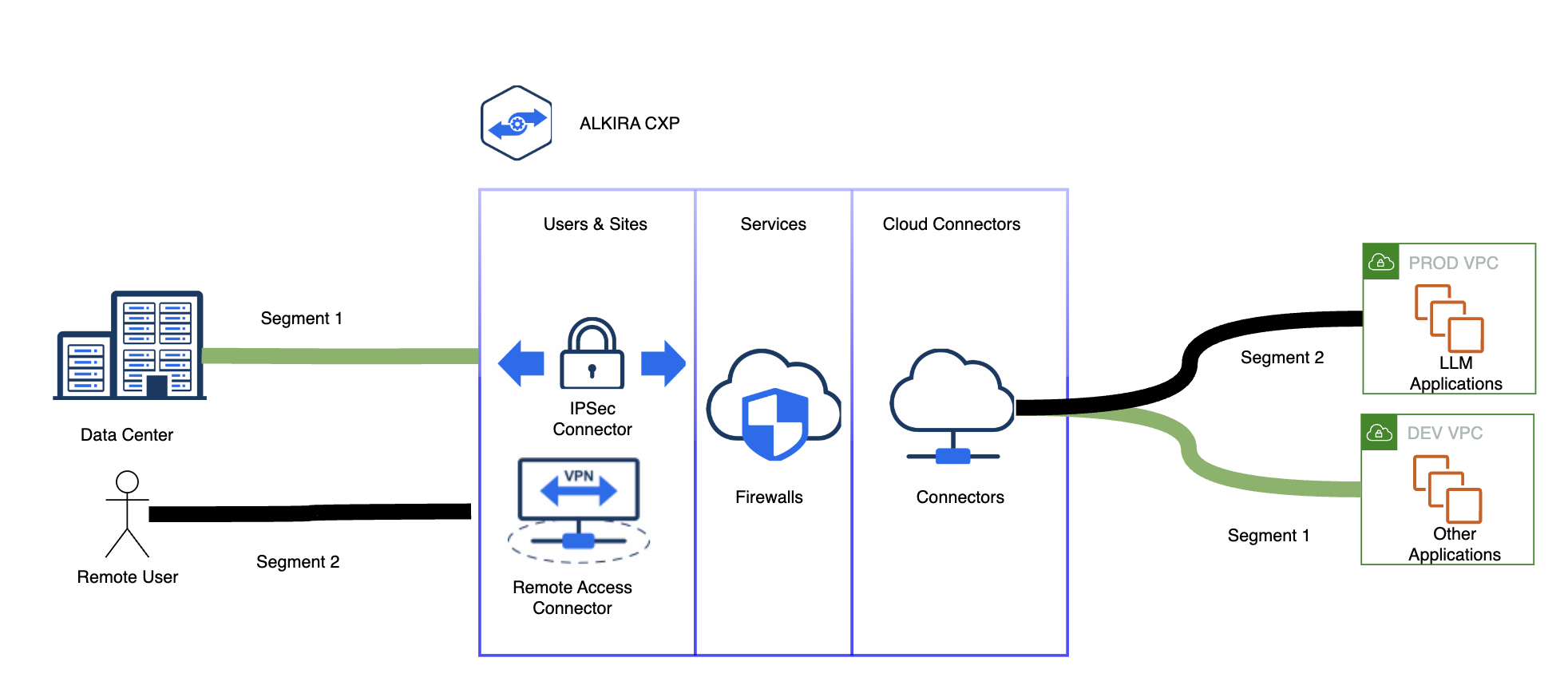

Figure 1: Isolate connectivity for LLM applications using Alkira segments

Segmentation

A segment represents a unique routing and policy space within the Alkira environment, and customers can maintain the isolation by providing access to the required applications from the relevant environments.

Refer to this blog for more details about cloud network segmentation.

Customers can leverage policy-based and role-based access on Alkira to provide required access for configuration and visibility into these resources.
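To make the idea of a segment as an isolated routing and policy space more concrete, here is a toy model in Python. It is a conceptual sketch only and does not represent Alkira's API or configuration format; the `Segment` class, the `can_reach` helper, and all environment and application names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Segment:
    """Toy stand-in for an isolated routing and policy space."""
    name: str
    members: set[str] = field(default_factory=set)       # attached environments
    applications: set[str] = field(default_factory=set)  # reachable applications


def can_reach(segments: list[Segment], source: str, app: str) -> bool:
    """A source reaches an application only if both sit in the same segment."""
    return any(source in s.members and app in s.applications for s in segments)


# Hypothetical layout: LLM workloads are kept apart from general corporate traffic.
segments = [
    Segment("llm", {"data-science-vpc"}, {"llm-inference-api"}),
    Segment("corp", {"branch-office"}, {"hr-portal"}),
]

if __name__ == "__main__":
    print(can_reach(segments, "data-science-vpc", "llm-inference-api"))
    print(can_reach(segments, "branch-office", "llm-inference-api"))
```

In this model, an environment that is not a member of the `llm` segment has no path to the LLM application unless someone explicitly adds it, which is the isolation property the segment is meant to provide.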

Visibility

Alkira provides visibility into traffic flows destined for these applications. Customers can use it to troubleshoot connectivity issues, and integrations with NetFlow are available.

Security

Alkira integrates seamlessly with firewall vendors such as Fortinet, Check Point, and Palo Alto, and supports traffic inspection for any type of traffic flow. Customers can use these firewalls to inspect traffic and apply security policies that allow or deny it as required. Alkira also supports autoscaling as part of the solution, so inspection capacity can scale up or down with demand.

Latency

Customers can connect to the Alkira CXP in the nearest region, depending on where the applications reside, and then use the Alkira Backbone for low-latency connectivity to those applications. This helps latency-sensitive applications in particular.
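The "nearest region" choice can be pictured as picking the entry point with the lowest measured round-trip time. The sketch below assumes the measurements have already been collected by some other means; the `nearest_region` helper, the region names, and the latency values are all hypothetical and are not taken from Alkira.

```python
from __future__ import annotations


def nearest_region(rtt_ms: dict[str, float]) -> str:
    """Return the region with the lowest measured round-trip time."""
    if not rtt_ms:
        raise ValueError("no latency measurements supplied")
    return min(rtt_ms, key=rtt_ms.get)


# Hypothetical measurements in milliseconds from one site to each region.
measurements = {"us-west": 18.4, "us-east": 71.2, "eu-central": 142.9}

if __name__ == "__main__":
    print(nearest_region(measurements))
```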

Scalability

LLM-based applications can require significant bandwidth resources; depending on these requirements, the Alkira CXP can scale up without impacting traffic flows.

Conclusion

Alkira can help customers build secure connectivity to LLM-based applications while meeting scale and compliance requirements. As enterprises continue to evolve and embrace digital transformation, Alkira can be crucial in ensuring efficient, secure, and scalable network operations.

Ahmed Abeer is a Sr. Product Manager at Alkira, where he is responsible for building a best-in-class Multi-Cloud Networking and Security Product. He has been in Product Management for more than ten years at organizations both large and small. He has worked with large enterprise and service provider customers to enable LTE/5G MPLS network infrastructure, automate Layer 3 Data Center, enable Next-Gen Multi-Cloud architecture, and define customers’ Multi-Cloud strategies. Ahmed’s technical expertise is in Cloud Computing and Layer 2/Layer 3 network technologies. Ahmed is a public speaker at various conferences and forums and holds a Master’s Degree in Computer Engineering.

Deepesh Kumar is a Solutions Architect and product specialist in the computer networking industry with over 6 years of experience. He currently works as part of the post-sales team at Alkira and focuses on working with customers to design and deploy the Alkira solution. Prior to Alkira, he worked at Viptela, which was acquired by Cisco Systems. He holds a master’s degree from San Jose State University.